VLSOT

Monocular-Video-Based 3D Visual Language Tracking

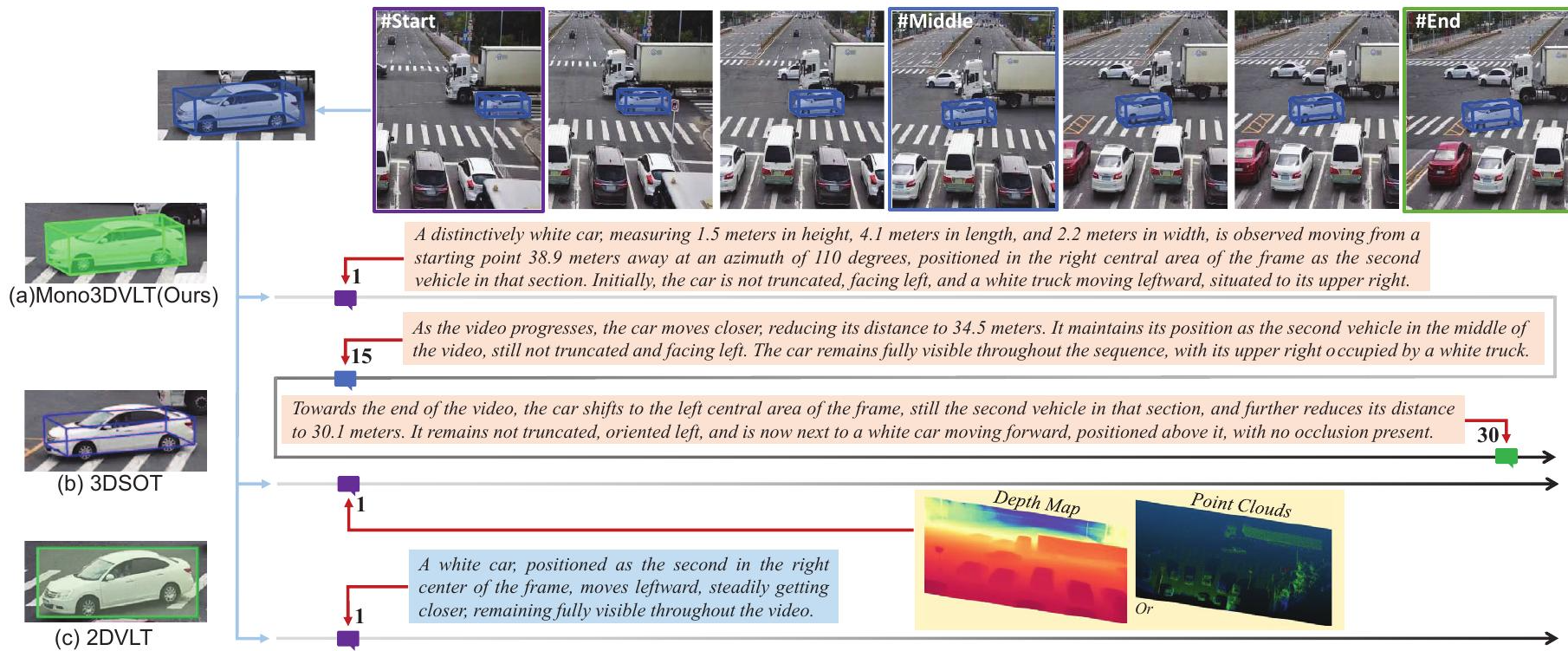

- Visual-Language Tracking (VLT) is an emerging paradigm that bridges the human-machine performance gap by integrating visual and linguistic cues, extending single-object tracking to text-driven video comprehension.

- However, existing VLT research remains confined to 2D spatial domains, lacking the capability for 3D tracking in monocular video—a task traditionally reliant on expensive sensors (e.g., point clouds, depth measurements, radar) without corresponding language descriptions for their outputs.

- The code are publicly available (https://github.com/astudyber/Mono3DVLT), advancing low-cost monocular 3D tracking with language grounding.

Submission Guidelines

Mono3DVLT-V2X Dataset : By applying, you can obtain the download link for the annotation file corresponding to the dataset. To avoid infringement, please obtain the images corresponding to the dataset from the V2X-Seq dataset: "V2x-seq: A large-scale sequential dataset for vehicle-infrastructure cooperative perception and forecasting." You can also download it through the following link: https://drive.google.com/file/d/1VwiXsNXHAeeCT5STIPesWX2w3UT-6x7B/view?usp=sharing

3D Object Detection Prediction File Format Specification

1. File Naming Rule

The prediction files must be named according to the following format:

{sampleID}_pred_box0_.txt

Example:

sample001_pred_box0_.txt, car_003_pred_box0_.txt

2. File Content Format

Each file should contain 30 lines, with each line representing the prediction result for one frame:

1 l w h x y z 2 l w h x y z 3 l w h x y z ... 30 l w h x y z

Format Description

| Column | Content | Description |

|---|---|---|

| 1st Column | 1–30 |

Frame index (from 1 to 30) |

| 2nd–7th Columns | l w h x y z |

3D bounding box coordinates |

3. Coordinate Requirements

- Data Type: Floating-point numbers, preferably with four decimal places

- Unit: Meters (m)

- Coordinate System: 3D world coordinate system

Note: Use a period (

.) as the decimal separator. Do not use commas (,).

4. Example File Content

1 0.0413 0.0061 0.3771 0.4055 -0.2095 0.2607 2 0.0421 0.0060 0.3745 0.4040 -0.2057 -0.2586 3 0.0423 0.0060 0.3760 -0.4028 -0.2031 0.2531 4 0.0434 0.0060 0.3736 0.4024 0.2010 -0.2514 5 0.0441 0.0060 0.3724 -0.4011 0.1982 -0.2453 ... 30 0.0605 0.0056 0.3588 0.3789 -0.1567 -0.1814

5. Folder Structure

The submitted prediction results should be organized as follows:

result/

├── 000237.txt

├── 001483.txt

├── 003235.txt

└── ...

├── 000237.txt

├── 001483.txt

├── 003235.txt

└── ...

Recommendation: Compress the entire folder into a ZIP file before submission. Example:

submission_result.zip

Method Leaderboard

12 Methods

6 Metrics

This leaderboard shows methods that are online and have submitted results. Methods are ranked based on their performance metrics.

| Method | SR@0.5 Higher is better | SR@0.7 Higher is better | AOR Higher is better | PR@1.0 Higher is better | ACE Lower is better | PR@0.5 Higher is better |

|---|---|---|---|---|---|---|

|

Mono3DVLT-MT

Last submission: 2025-08-07

|

81.6300 | 68.9400 | 85.1200 | 81.5600 | 0.5210 | 62.3600 |

|

Mono3DVG-TR

Last submission: 2025-08-31

|

71.7500 | 63.4700 | 79.1300 | 75.8900 | 0.5940 | 58.9100 |

|

JointNLT+backproj

Last submission: 2025-08-31

|

61.4000 | 51.7000 | 68.3100 | 70.4300 | 0.6970 | 53.6300 |

|

MMT3DVG

Open Source

Last submission: 2025-11-24

|

60.6667 | 42.6667 | 52.2601 | 40.3333 | 1.7716 | 15.3333 |

|

TransVG+backproj

Last submission: 2025-08-31

|

54.5000 | 41.6200 | 58.6200 | 66.7800 | 0.7830 | 47.5600 |

|

ReSC+backproj

Last submission: 2025-08-31

|

50.3300 | 38.4100 | 53.4300 | 68.5200 | 0.7920 | 47.5600 |

|

ZSGNet+backproj

Last submission: 2025-08-31

|

37.2900 | 25.3700 | 40.6900 | 37.4100 | 1.1320 | 16.3000 |

|

FAOA+backproj

Last submission: 2025-08-31

|

35.4300 | 29.6200 | 41.1800 | 43.4000 | 1.0590 | 25.3200 |

|

Hardcoded

Last submission: 2025-11-24

|

0.0000 | 0.0000 | 0.0000 | 0.0000 | 41.4169 | 0.0000 |

|

对对队的

Open Source

Last submission: 2025-11-24

|

0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

|

对对队

Open Source

Last submission: 2025-11-24

|

0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

|

UAVision

Open Source

Last submission: 2025-11-24

|

0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |