UAV-Crack

UAV-based pavement crack segmentation

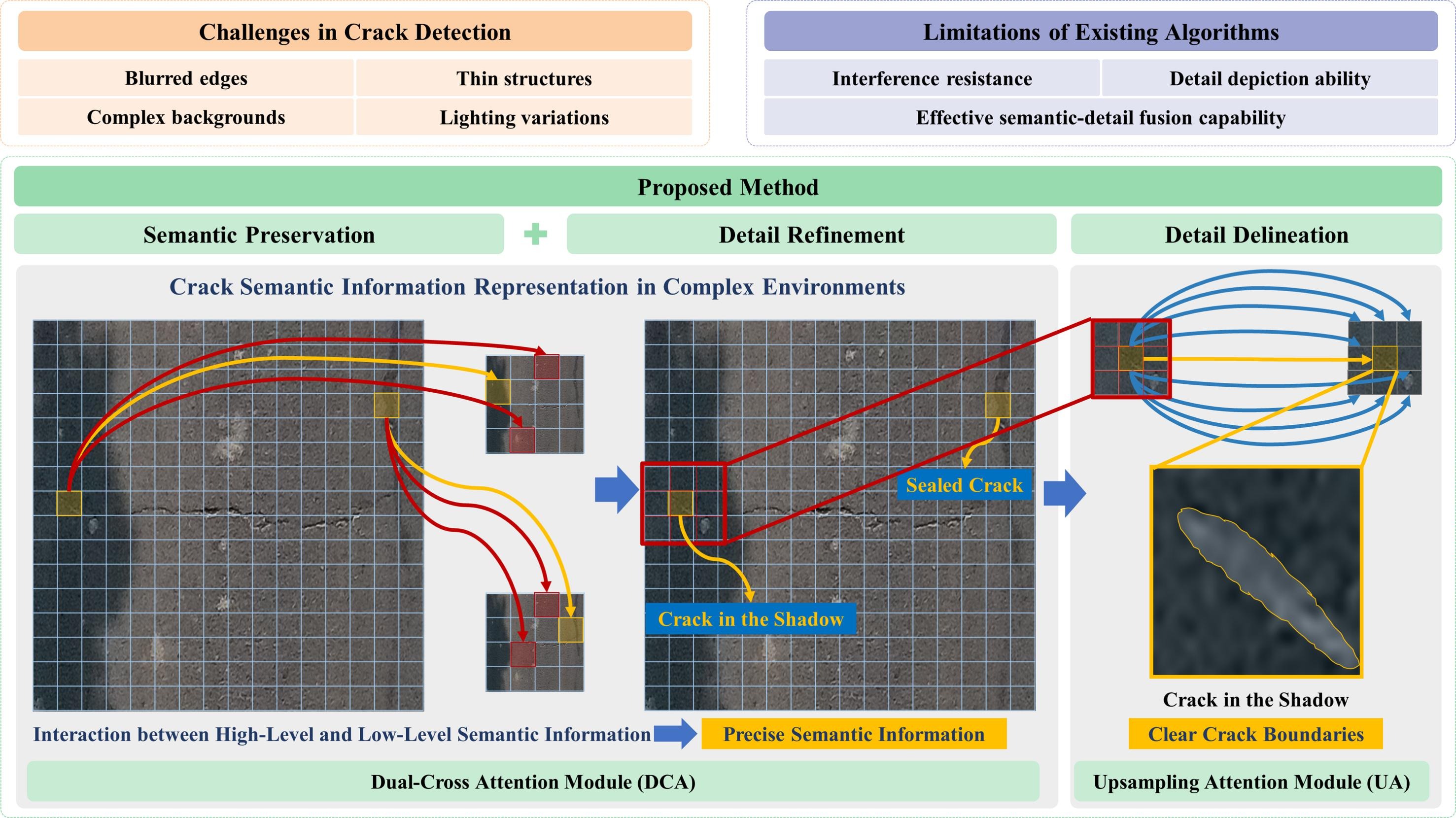

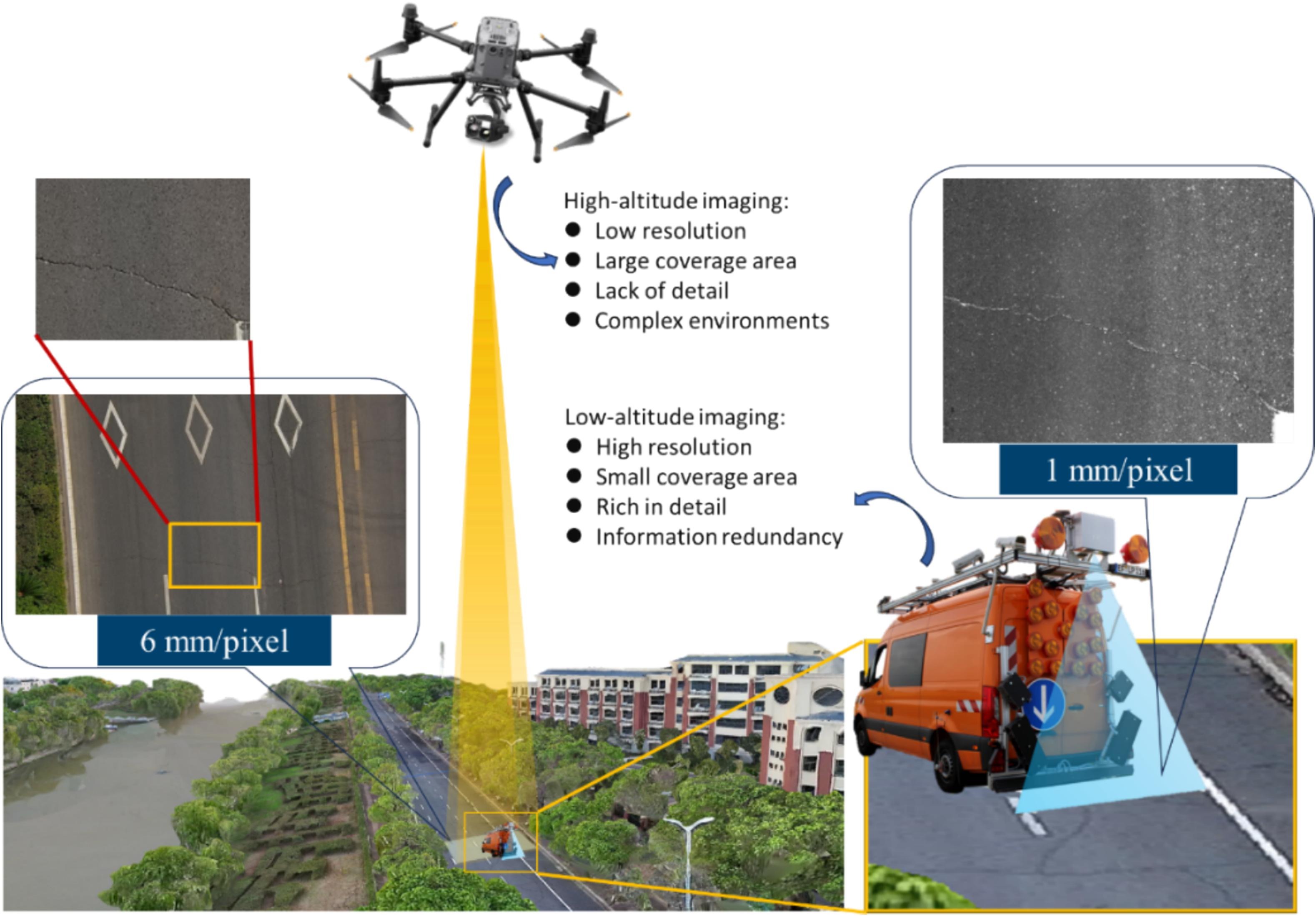

- Acquiring pavement distress images with UAVs poses challenges not encountered with ground-based methods, owing to differences in camera setup, flight parameters, and lighting conditions. These factors introduce domain shifts that reduce how well segmentation models generalize.

- A dedicated dataset, UAV-CrackX, was created with 1500 pixel-wise annotated UAV images to support model development. The dataset includes three subsets (UAV-CrackX4, UAV-CrackX8, and UAV-CrackX16), each with 500 images captured at 4×, 8×, and 16× zoom, respectively.

- Original high-resolution images (2688 × 1512 pixels) were split into a 4 × 4 grid of 16 patches (672 × 378 pixels each) for efficient processing. Reference baseline: MMSegmentation, https://github.com/open-mmlab/mmsegmentation/tree/main
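The patch geometry follows from the numbers above: 2688 / 4 = 672 and 1512 / 4 = 378, so each frame is cut into a 4 × 4 grid. A minimal NumPy sketch of that tiling, assuming row-major patch order (the ordering actually used to build UAV-CrackX is not stated here, and `split_into_patches` is a hypothetical helper):

```python
import numpy as np


def split_into_patches(image: np.ndarray, rows: int = 4, cols: int = 4) -> list:
    """Split an H x W (x C) image into rows * cols equal, non-overlapping patches.

    Patches are returned in row-major order (left to right, then top to bottom).
    This ordering is an assumption, not the dataset's documented patch indexing.
    """
    h, w = image.shape[:2]
    if h % rows or w % cols:
        raise ValueError(f"{w}x{h} image cannot be split evenly into a {cols}x{rows} grid")
    ph, pw = h // rows, w // cols
    return [
        image[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    # Dummy 2688 x 1512 RGB frame; NumPy shapes are (height, width, channels).
    frame = np.zeros((1512, 2688, 3), dtype=np.uint8)
    patches = split_into_patches(frame)
    print(len(patches), patches[0].shape)  # 16 (378, 672, 3)
```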

Submission Guidelines

UAV-Crack Benchmark Evaluation

1. Evaluation Data Format

After unzipping the dataset you received, you will find the following folder structure:

- gtFine folder: Contains the ground-truth binary masks, which use the same format your model's predictions must follow. Pixel value 0 represents normal road surface and 1 represents cracks.

- train subfolder (inside gtFine): Includes ground-truth masks at 3 scales (X4, X8, X16), all with a resolution of 672×378.

- leftimg8bit folder: Contains input images (original road images).

- train subfolder (inside leftimg8bit): Input images at 3 scales: X4, X8, X16, resolution 672×378.

- val subfolder (inside leftimg8bit): Contains 300 validation images for testing, also 672×378.

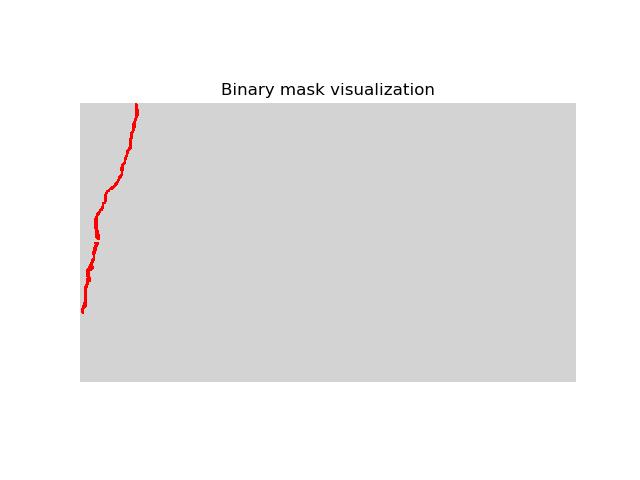

- showgt.py: A visualization script to display binary masks more intuitively (not used in evaluation).
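Because cracks are stored as pixel value 1 rather than 255, a raw mask looks almost entirely black in an ordinary image viewer, which is presumably what showgt.py compensates for. The sketch below is a hypothetical stand-in, not the contents of showgt.py: `check_binary_mask` verifies that a mask is single-channel, 672×378 and strictly 0/1, and `to_visible` stretches it to 0/255. It assumes 672×378 means width × height, i.e. a NumPy shape of (378, 672).

```python
import numpy as np

# Assumption: "672×378" is width × height, so the NumPy shape is (height, width).
EXPECTED_HW = (378, 672)


def check_binary_mask(mask: np.ndarray, expected_hw=EXPECTED_HW) -> None:
    """Raise ValueError unless the mask is 2-D, the expected size, and only 0/1."""
    if mask.ndim != 2:
        raise ValueError(f"expected a single-channel mask, got shape {mask.shape}")
    if mask.shape != tuple(expected_hw):
        raise ValueError(f"expected (height, width) {expected_hw}, got {mask.shape}")
    values = set(np.unique(mask).tolist())
    if not values <= {0, 1}:
        raise ValueError(f"mask contains values other than 0/1: {sorted(values)}")


def to_visible(mask: np.ndarray) -> np.ndarray:
    """Map 0 -> 0 and 1 -> 255 so crack pixels appear white (call on a checked 0/1 mask)."""
    return mask.astype(np.uint8) * 255
```

To try it on a real file, Pillow (an assumed dependency) can load a mask with `np.array(Image.open(path))` and save the visualization with `Image.fromarray(to_visible(mask)).save(out_path)`.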

Example of Model Input Image (.jpg):

Example of Model Output Binary Mask (.png):

Example of Visualized Binary Mask using showgt.py:

Benchmark Metrics Introduction:

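- "mIoU": Mean Intersection over Union, averaged over the two classes (normal road surface and crack)

- "Crack F1": Harmonic mean of crack Precision and crack Recall

- "Crack IoU": Intersection over Union for the crack class

- "Crack Precision": Precision for the crack class (fraction of predicted crack pixels that are true cracks)

- "Crack Recall": Recall for the crack class (fraction of ground-truth crack pixels that are predicted as cracks)

- "aAcc": Overall pixel accuracy (fraction of all pixels classified correctly)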
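All six metrics can be derived from a single 2×2 confusion matrix. A minimal NumPy sketch, assuming (as mmsegmentation's IoU metric does) that pixel counts are accumulated over the whole evaluation set before the ratios are taken, and that scores are reported as percentages; `accumulate` and `summarize` are hypothetical helper names, not the official evaluator.

```python
import numpy as np


def accumulate(conf: np.ndarray, pred: np.ndarray, gt: np.ndarray) -> None:
    """Add one image's pixel counts to a 2x2 int64 confusion matrix in place.

    Rows index the ground truth, columns the prediction; 0 = normal surface, 1 = crack.
    Both masks must have the same shape and contain only 0/1 values.
    """
    idx = gt.astype(np.int64).ravel() * 2 + pred.astype(np.int64).ravel()
    conf += np.bincount(idx, minlength=4).reshape(2, 2)


def _ratio(num, den) -> float:
    # Undefined ratios (e.g. precision with no predicted cracks) are reported as 0 here.
    return float(num) / float(den) if den else 0.0


def summarize(conf: np.ndarray) -> dict:
    """Turn accumulated counts into the six leaderboard metrics, in percent."""
    tn, fp = conf[0, 0], conf[0, 1]
    fn, tp = conf[1, 0], conf[1, 1]
    iou_bg = _ratio(tn, tn + fp + fn)      # IoU of class 0 (normal road surface)
    iou_crack = _ratio(tp, tp + fp + fn)   # IoU of class 1 (crack)
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    f1 = _ratio(2 * precision * recall, precision + recall)
    return {
        "mIoU": 100 * (iou_bg + iou_crack) / 2,
        "Crack F1": 100 * f1,
        "Crack IoU": 100 * iou_crack,
        "Crack Precision": 100 * precision,
        "Crack Recall": 100 * recall,
        "aAcc": 100 * _ratio(tn + tp, conf.sum()),
    }
```

Start from `conf = np.zeros((2, 2), dtype=np.int64)`, call `accumulate` once per image, then `summarize` once at the end. With an all-background prediction the crack scores are all 0 and the background IoU equals the pixel accuracy, so mIoU works out to aAcc / 2; the UC and U rows of the leaderboard follow this pattern (48.46 ≈ 96.93 / 2). How the official evaluator reports undefined ratios is not documented here.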

2. Evaluation Result Submission Format

We have selected 300 images from the leftimg8bit/val/ folder for evaluation. You are required to submit your model’s predicted binary crack masks for these images only.

Submission Requirements:

1. The resolution of your predicted binary images must match the input resolution of 672×378.

2. The file names of your predicted images must exactly match the input image names, except for the extension (.png instead of .jpg).

3. Note: input images are in .jpg format, but your output predictions must be in .png format.
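Requirements 2 and 3 amount to a one-to-one name mapping. A small standard-library sketch, assuming, as the example file names in the submission layout suggest, that each prediction name is the input name with its final .jpg extension replaced by .png; `output_name` and `check_names` are hypothetical helpers.

```python
from pathlib import Path


def output_name(input_name: str) -> str:
    """Map an input image name to its required prediction name (.jpg -> .png)."""
    p = Path(input_name)
    if p.suffix.lower() != ".jpg":
        raise ValueError(f"expected a .jpg input, got {input_name!r}")
    # with_suffix replaces only the last extension, so ".JPG_12_z" stays intact.
    return p.with_suffix(".png").name


def check_names(input_names, pred_names) -> list:
    """List prediction files that are missing or unexpected relative to the inputs."""
    expected = {output_name(n) for n in input_names}
    got = set(pred_names)
    problems = [f"missing: {n}" for n in sorted(expected - got)]
    problems += [f"unexpected: {n}" for n in sorted(got - expected)]
    return problems


if __name__ == "__main__":
    # Hypothetical input name, inferred from the example prediction names.
    print(output_name("937956_DJI_20231015154707_0002_Z.JPG_12_z.jpg"))
    # 937956_DJI_20231015154707_0002_Z.JPG_12_z.png
```

An empty list from `check_names` means every input has exactly one correctly named prediction and nothing extra is present.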

How to Submit:

- Please compress all 300 predicted binary mask images (.png) into a single zip file.

- The zip file should be named result.zip.

The submitted prediction results should be organized as follows:

```
result/
├── 937956_DJI_20231015154707_0002_Z.JPG_12_z.png
├── 937956_DJI_20231015154713_0005_Z.JPG_5_z.png
├── 937956_DJI_20231015154717_0007_Z.JPG_7_z.png
└── ...
```


Important: Do not include any extra files or images in the submission zip. Place exactly the 300 required predicted mask images in a folder named result, and compress that folder into result.zip. This ensures correct evaluation.
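Packaging can be scripted with Python's standard zipfile module. This sketch, with a hypothetical `build_submission` helper and an assumed local directory holding your predictions, writes only the .png files into result.zip under a top-level result/ folder, matching the layout shown above, and refuses to build the archive unless exactly 300 masks are present.

```python
import zipfile
from pathlib import Path


def build_submission(pred_dir: str, zip_path: str = "result.zip", expected_count: int = 300) -> list:
    """Zip every .png in pred_dir as result/<name>.png; other files are left out."""
    pngs = sorted(p for p in Path(pred_dir).iterdir() if p.is_file() and p.suffix == ".png")
    if len(pngs) != expected_count:
        raise ValueError(f"expected {expected_count} .png masks, found {len(pngs)}")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for p in pngs:
            # arcname places each mask inside the required top-level result/ folder.
            zf.write(p, arcname=f"result/{p.name}")
    return [p.name for p in pngs]
```

Opening the finished archive with `zipfile.ZipFile("result.zip").namelist()` is a quick way to confirm it holds only result/*.png entries before uploading.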

Method Leaderboard

22 methods, 6 metrics

This leaderboard lists the methods that have submitted results. Methods are listed in descending order of mIoU.

| Method | Last submission | mIoU | Crack F1 | Crack IoU | Crack Precision | Crack Recall | aAcc |
|---|---|---|---|---|---|---|---|
| Aero裂鉴队 | 2025-11-24 | 80.10 | 77.26 | 62.95 | 78.81 | 75.77 | 97.38 |
| 照隙镜2.0 | 2025-11-22 | 80.03 | 77.12 | 62.76 | 80.20 | 74.27 | 97.41 |
| CrackNet-Pro | 2025-11-24 | 79.65 | 76.58 | 62.05 | 80.42 | 73.09 | 97.37 |
| Hello,Crack! (open source) | 2025-11-24 | 79.64 | 76.79 | 62.32 | 72.57 | 81.53 | 97.10 |
| UAVCRACK-trying1 | 2025-11-24 | 79.41 | 76.20 | 61.55 | 81.72 | 71.37 | 97.38 |
| RBB | 2025-11-22 | 79.40 | 76.27 | 61.64 | 78.72 | 73.96 | 97.29 |
| C1 enhanced | 2025-11-23 | 79.22 | 75.93 | 61.20 | 81.23 | 71.28 | 97.34 |
| 照隙镜 | 2025-11-18 | 79.18 | 75.87 | 61.13 | 81.55 | 70.94 | 97.35 |
| UAV-CrackSeg (open source) | 2025-11-16 | 78.76 | 75.44 | 60.57 | 75.02 | 75.87 | 97.10 |
| UAV-A-MODEL | 2025-11-12 | 78.42 | 74.88 | 59.84 | 76.92 | 72.95 | 97.12 |
| UAV-B-MODEL | 2025-11-16 | 78.30 | 74.68 | 59.59 | 78.12 | 71.53 | 97.15 |
| UAV11111 | 2025-11-18 | 77.73 | 73.84 | 58.53 | 77.56 | 70.46 | 97.07 |
| dfanet | 2025-11-18 | 75.43 | 70.22 | 54.11 | 79.68 | 62.77 | 96.87 |
| kun | 2025-11-18 | 74.43 | 69.51 | 53.27 | 60.62 | 81.46 | 95.80 |
| 破晓-UAV_deep | 2025-11-24 | 74.11 | 68.94 | 52.60 | 61.11 | 79.07 | 95.81 |
| 熊熊上分队 | 2025-11-24 | 72.64 | 65.61 | 48.82 | 79.88 | 55.67 | 96.57 |
| Uav-deep | 2025-11-24 | 72.07 | 66.67 | 50.00 | 51.55 | 94.34 | 94.46 |
| UAV-Crack1 | 2025-11-25 | 59.42 | 42.72 | 27.17 | 36.64 | 51.23 | 91.93 |
| pcd | 2025-11-23 | 49.93 | 3.34 | 1.70 | 4.40 | 2.69 | 98.17 |
| UC | 2025-11-17 | 48.46 | 0.00 | 0.00 | 0.00 | 0.00 | 96.93 |
| U | 2025-11-24 | 48.13 | 0.00 | 0.00 | 0.00 | 0.00 | 96.27 |
| Unet_crack | 2025-11-25 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

All scores are percentages; higher is better for every metric.