AesBench

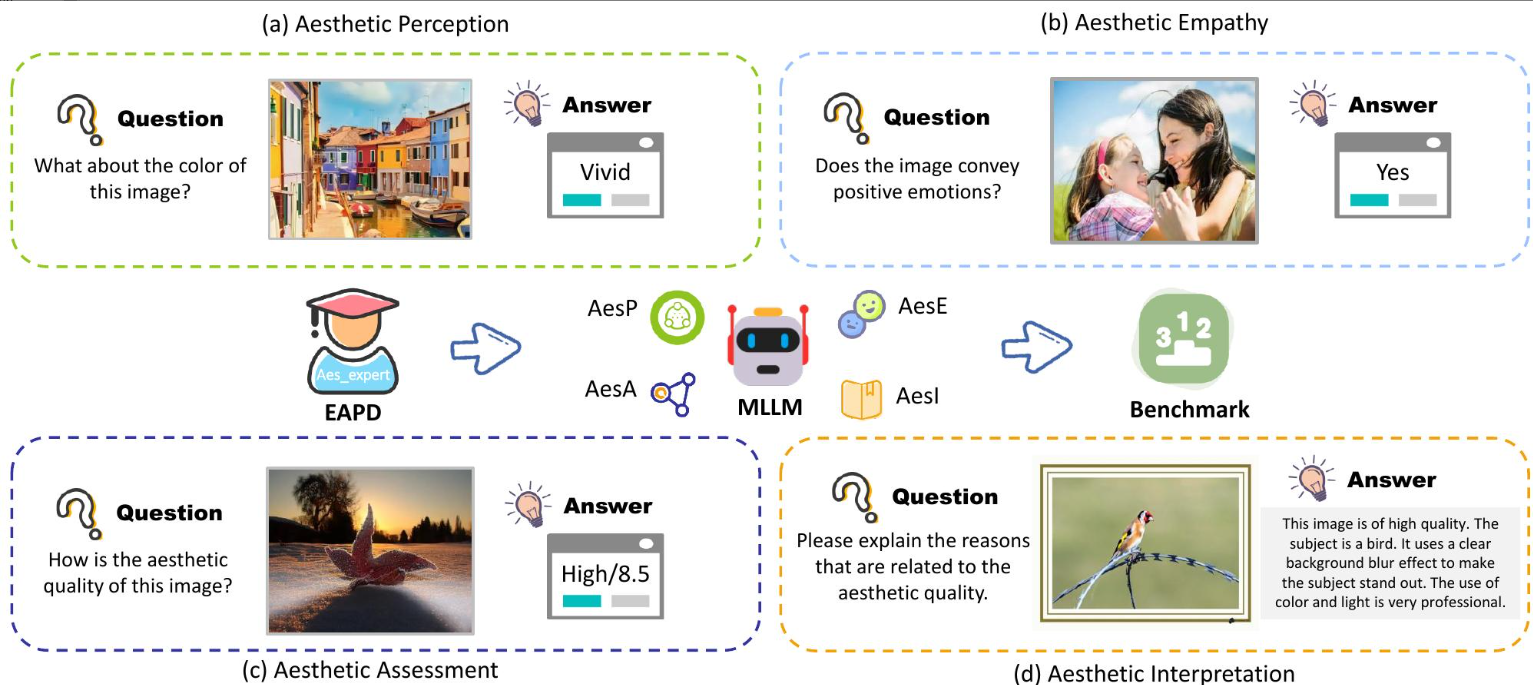

Multimodal Large Language Models on Image Aesthetics Perception

- With their rapid development, multimodal large language models (MLLMs) have shown great potential in human-computer interaction and everyday collaboration.

- However, the ability of MLLMs to perceive image aesthetics remains unclear, even though this ability is crucial for practical applications such as art design and image generation. Through AesBench, we hope to inspire further exploration of the aesthetic perception capabilities of MLLMs in academia and industry, and we make the relevant source data public to promote further development in this field.

- Our code is available at https://github.com/yipoh/AesBench

Submission Guidelines

Aesthetic Benchmark Evaluation

1. Evaluation Data Format

After unzipping the dataset you received, you will find:

- images folder: Image inputs for your model

- AesBench_evaluation.json: Text inputs for your model
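Before running inference, it can help to confirm that the two parts of the download line up. The sketch below assumes the unzipped layout is a directory containing `images/` and `AesBench_evaluation.json`, and that each top-level JSON key is an image filename (as the sample entry's `"xxx.jpg"` key suggests). The function name `find_missing_images` is hypothetical.

```python
import json
from pathlib import Path


def find_missing_images(dataset_dir: str) -> list[str]:
    """Return JSON keys that have no matching file in the images folder.

    Assumption: <dataset_dir>/images/ holds the images and each top-level
    key of <dataset_dir>/AesBench_evaluation.json is an image filename.
    """
    root = Path(dataset_dir)
    with open(root / "AesBench_evaluation.json", encoding="utf-8") as f:
        samples = json.load(f)
    image_dir = root / "images"
    # Keep only the keys whose image file does not exist on disk.
    return sorted(name for name in samples if not (image_dir / name).is_file())
```

An empty list means every text sample has a matching image input.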

The JSON file contains test data for 2,800 photos. An example entry for a single image is shown below:

{
  "xxx.jpg": {
    "AesP_data": {
      "Question": "xxx?",
      "Options": "A) xx\nB) xx\nC) xx\nD)xx"
    },
    "AesE_data": {
      "Question": "xxx?",
      "Options": "A) xx\nB) xx\nC) xx"
    },
    "AesA1_data": {
      "Question": "xx?",
      "Options": "A) High\nB) Medium\nC) Low"
    },
    "AesI_data": {
      "Question": "xxx"
    }
  }
}
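Each task entry pairs a `Question` with an optional `Options` string whose choices are separated by `\n`. The sketch below turns one image's entry into text prompts and maps a predicted letter back to the full option line. The prompt wording ("Answer with the letter of one option.") and the helper names `build_prompts` and `option_for_letter` are illustrative assumptions, not the benchmark's official prompt or tooling.

```python
# Task keys as they appear in AesBench_evaluation.json.
TASK_KEYS = ("AesP_data", "AesE_data", "AesA1_data", "AesI_data")


def build_prompts(sample: dict) -> dict[str, str]:
    """Build one text prompt per task key present in an image's entry.

    The instruction appended to multiple-choice questions is a hypothetical
    template; adapt it to your model.
    """
    prompts = {}
    for key in TASK_KEYS:
        task = sample.get(key)
        if task is None:
            continue
        prompt = task["Question"]
        if "Options" in task:  # AesI_data has an open-ended question only
            prompt += "\n" + task["Options"] + "\nAnswer with the letter of one option."
        prompts[key] = prompt
    return prompts


def option_for_letter(options: str, letter: str) -> str:
    """Return the full option line (e.g. "B) No") for a predicted letter."""
    prefix = letter.strip().upper() + ")"
    for line in options.split("\n"):
        if line.strip().startswith(prefix):
            return line.strip()
    raise ValueError(f"letter {letter!r} not found in options")
```

Returning the whole option line matters because the example submission gives AesP and AesE answers as full option text (for instance `"B) No"`), while AesA1 is shown as a lowercase level word such as `"high"`.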

Benchmark Metrics:

- AesP: Aesthetic Perception

- AesE: Aesthetic Empathy

- AesA1: Aesthetic Assessment

- AesI: Aesthetic Interpretation

2. Evaluation Result Submission Format

We have selected a subset of these photos for evaluation. You only need to submit predictions for the selected images.

We evaluate your model from three perspectives: AesP, AesE, and AesA1.

The submitted file should be named result.json. Your model's predicted results must strictly follow the example structure below:

{
  "baid_18347.jpg": {
    "AesP": "D) Because it employs an S-shaped composition with natural lighting and an elegant cyan main color tone",
    "AesE": "B) Because the image portrays a serene environment and the woman's appearance is aesthetically pleasing.",
    "AesA1": "high"
  },
  ...
  "para_iaa_pub10205_.jpg": {
    "AesP": "C) The image appears dim and the overall tone fails to match the joyful atmosphere typically associated with Christmas.",
    "AesE": "B) No",
    "AesA1": "medium"
  }
}
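Because the structure must be followed strictly, a quick local check before submitting can catch missing or misnamed keys. This sketch assumes each image maps to exactly the three keys `AesP`, `AesE`, and `AesA1`, and that `AesA1` is one of the lowercase levels seen in the example (`high`, `medium`, `low`); both are readings of the example rather than an official schema. `validate_results` and `write_results` are hypothetical helpers.

```python
import json

REQUIRED_KEYS = ("AesP", "AesE", "AesA1")
# Lowercase levels as they appear in the example result.json (assumption).
AESA1_LEVELS = {"high", "medium", "low"}


def validate_results(results: dict) -> list[str]:
    """Return a list of human-readable problems; empty means no issues found."""
    problems = []
    for image, preds in results.items():
        if not isinstance(preds, dict):
            problems.append(f"{image}: entry is not an object")
            continue
        for key in REQUIRED_KEYS:
            value = preds.get(key)
            if not isinstance(value, str) or not value:
                problems.append(f"{image}: missing or empty {key}")
        extra = set(preds) - set(REQUIRED_KEYS)
        if extra:
            problems.append(f"{image}: unexpected keys {sorted(extra)}")
        level = preds.get("AesA1")
        if isinstance(level, str) and level and level not in AESA1_LEVELS:
            problems.append(f"{image}: AesA1 {level!r} is not high/medium/low")
    return problems


def write_results(results: dict, path: str = "result.json") -> None:
    """Write result.json only if validation passes; otherwise raise."""
    problems = validate_results(results)
    if problems:
        raise ValueError("\n".join(problems))
    with open(path, "w", encoding="utf-8") as f:
        json.dump(results, f, ensure_ascii=False, indent=2)
```

For instance, an entry keyed `AesP_GT` instead of `AesP` is reported both as missing `AesP` and as an unexpected key.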

3. Download Evaluation Image IDs

You only need to run inference on the images listed in eval.txt, then submit results that comply with the specifications above.

Important: Do not submit results for any additional images, as they may affect the final metric calculation. Finally, submit your results as a zip-compressed file.

Each line in eval.txt contains one evaluation image name. Download eval.txt here:

https://drive.google.com/file/d/1nKVP284yxxug_U36krTDMm_sEFy0yGFc/view?usp=sharing
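The last two requirements (only images from eval.txt, zip packaging) can be combined into one step. This is a minimal sketch assuming predictions are held in a dict keyed by image name and that `result.json` should sit at the root of the archive; the archive name `submission.zip` and the function names are hypothetical, so confirm the expected zip layout with the organizers.

```python
import json
import zipfile


def load_eval_ids(path: str = "eval.txt") -> list[str]:
    """Read one evaluation image name per line, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def package_submission(predictions: dict, eval_ids: list[str],
                       zip_path: str = "submission.zip") -> list[str]:
    """Zip a result.json restricted to eval_ids; return IDs with no prediction."""
    wanted = set(eval_ids)
    # Drop predictions for images not in eval.txt so they cannot
    # affect the metric calculation.
    results = {name: preds for name, preds in predictions.items() if name in wanted}
    missing = [name for name in eval_ids if name not in results]
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # Store the file under the required name, result.json.
        zf.writestr("result.json", json.dumps(results, ensure_ascii=False, indent=2))
    return missing
```

A non-empty return value lists evaluation images that still need predictions before the archive is submitted.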